Session Notes

We collected pre-existing data in the form of session notes: notes that writing center consultants write after each consultation to summarize the activities and events of the session. We collected these data so that they can be analyzed individually, by institution, as well as in aggregate, across institutions. Our IRB-approved project ensures that the data are aggregated and that the anonymity of consultants and clients is protected.

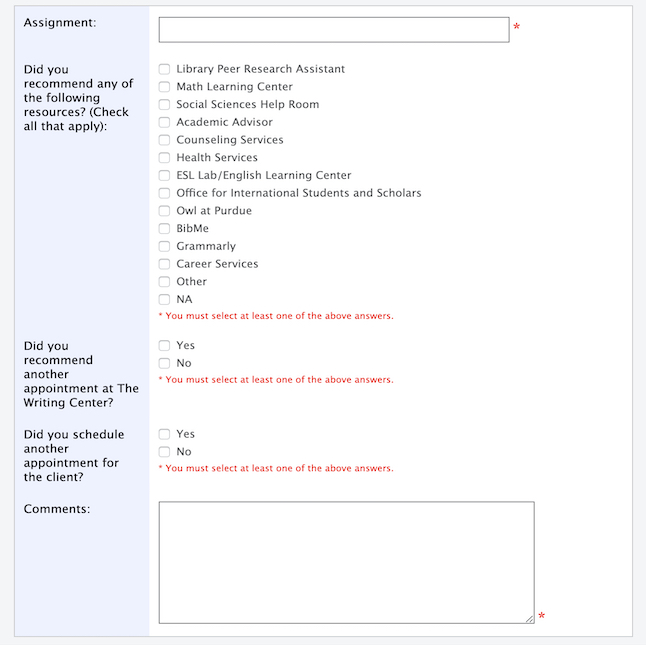

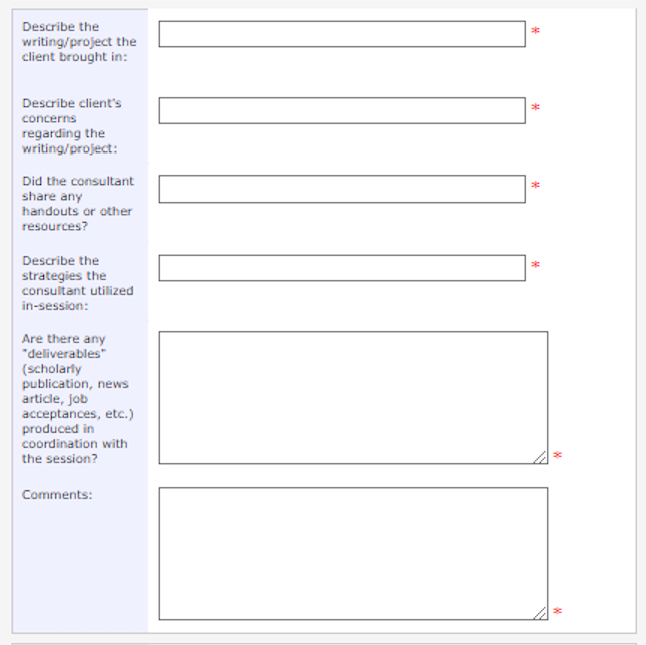

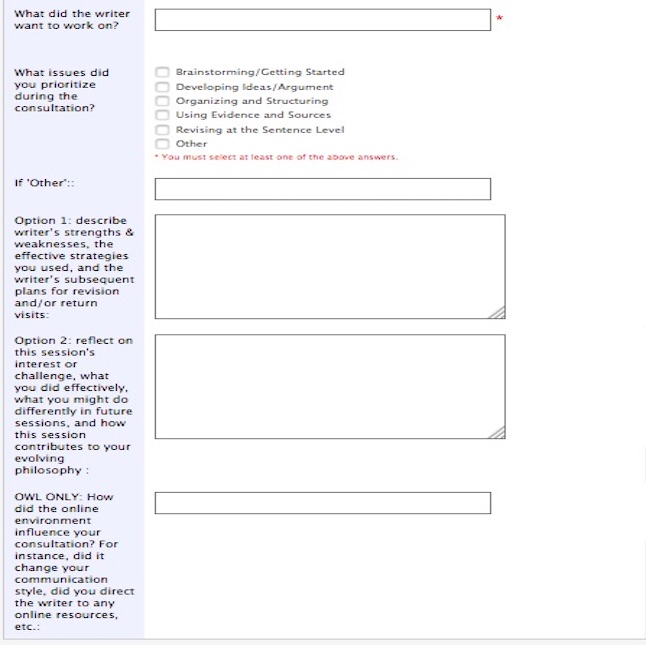

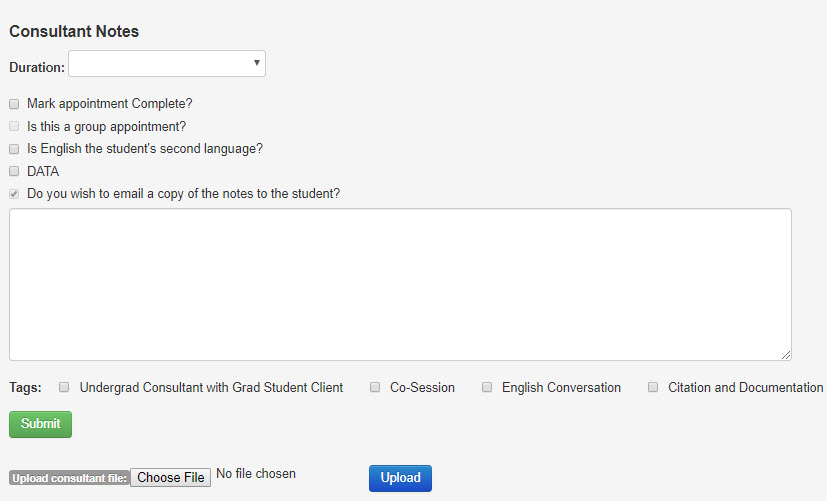

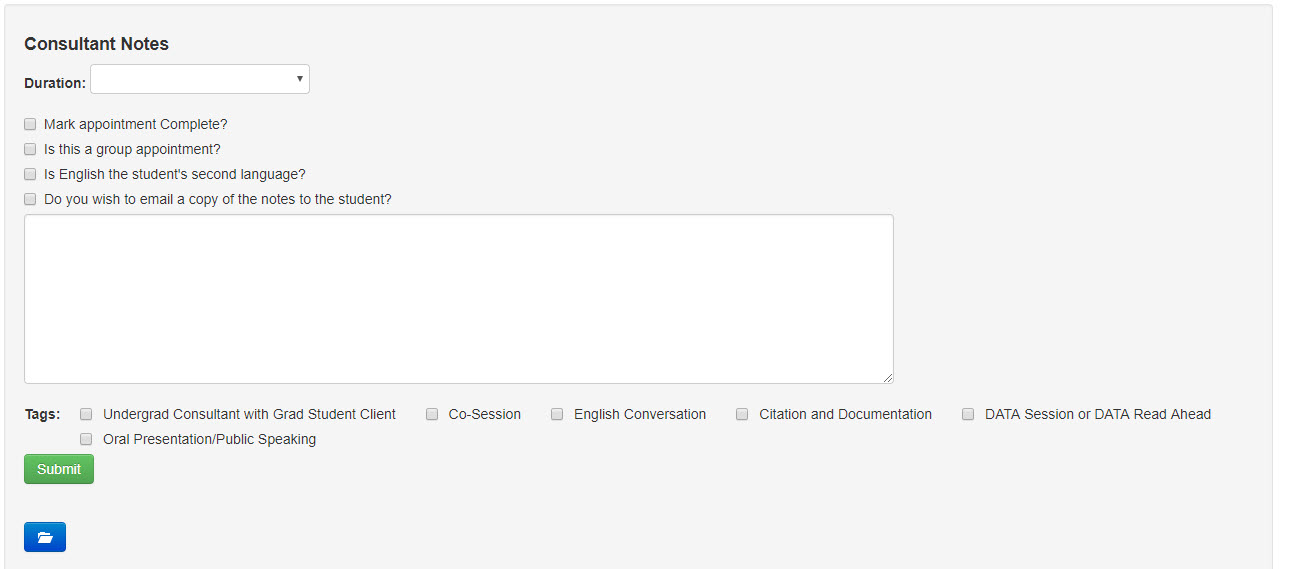

The images above show the online forms for session notes that consultants complete after each consultation at the four institutions included in this project. Each institution uses its own form to collect session notes. The term “Client Report Form” is used by WCOnline, a web-based platform that many writing centers (including those at Michigan State University, the University of Michigan, and The Ohio State University) use to manage scheduling, record keeping, and reporting. The platform is customizable, and each of the three centers that use WCOnline has adapted the client report form to meet local needs and priorities. The fourth institution, Texas A&M University, uses in-house client management software called CLEO.

Consultants complete a client report form after each session. They describe the assignment and identify any referrals to other resources or additional appointments. A comment box is provided for a description of what occurred during the consultation. These notes are internal to the writing center but can be shared with writers or instructors on request.

Consultants complete a client report form after each session. Consultants provide information about the writer’s priorities for the session, and about resources provided to the writer. Consultants describe the tutoring strategies used during the session and identify any written products connected to the session. A comment box is provided. These notes are largely internal to the writing center staff; they are not shared externally, except when the client requests a copy.

Peer writing consultants complete a client report form after each session with a writer. Consultants provide information about the writer’s and the consultant’s priorities for the session and then respond to one of two prompts. Option 1 invites consultants to write a note oriented primarily to other consultants, describing the writer’s strengths and weaknesses, the consulting strategies used, and plans for revision or return visits. Option 2 invites consultants to write a reflective note oriented to their own professional development, addressing the effectiveness of the session and what they might do differently in future consultations. Online Writing Lab (OWL) consultants are also invited to reflect on how the online environment affected their consultations. These notes are entirely internal to the writing center staff; they are not shared with writers or with instructors. The center coordinator and director review them for both supervision and professional development. Our consultant staff also uses the client report forms when preparing for consultations with returning writers.

Texas A&M University uses an in-house scheduling system called CLEO. Figure 4 shows the consultant notes for an asynchronous online appointment, while Figure 5 shows consultant notes for synchronous online appointments and face-to-face appointments.

Consultants complete a client report form after each asynchronous online session and review the session in the comment text box. When students submit documents for review, consultants use the comment feature in Microsoft Word or in a PDF reader to insert marginal comments into the document. The consultant then uploads the reviewed file and writes a summary note addressed directly to the client in the text box. The summary comments and the reviewed document are sent to the client automatically.

Consultants complete a client report form after each face-to-face or web conference session and review the session in the comment text box. Consultants are trained to write notes that follow the structure of each session. First, they describe the client’s concerns and preferences and how expectations were managed. Then, they narrate the work actually done in the session, including only observable behaviors and actions. Finally, they describe how the session concluded, including a debrief, next steps, or recommended resources.

Notes are not addressed to the client or automatically sent to the client upon completion. Clients can request copies of the notes, and they often do, either as a reinforcement of the concepts covered in the session or if they need proof of attendance for a required visit.

All notes are kept in CLEO, and consultants prepare for sessions by reviewing a client’s previous session notes if the client has a visit history. Writing center administrators and experienced tutors in supervisory roles also review the notes three times a semester as one way of monitoring consultants’ performance. The session notes at Texas A&M therefore have three sets of stakeholders: 1) consultants, 2) students, and 3) writing center administrators.

The Corpus

Using the text consultants entered in the comment boxes on each form, we created a combined data set, or corpus, of approximately 2 million words. To analyze the data, we used Voyant, an open-source, web-based application for text analysis of corpora. We chose Voyant because it has a low learning curve and because other writing center directors could easily access and use it as we expand the project to build a larger corpus from other writing centers’ report forms.
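Voyant does this work through its web interface. As a hedged illustration for readers who want to reproduce the pooling step outside Voyant, the Python sketch below combines comment-box text while keeping institution labels, so each subcorpus can be analyzed individually or in aggregate. The `(institution, text)` record format, the institution labels, the example notes, and the regular-expression tokenizer are all assumptions for illustration; they are not WCOnline or CLEO export formats and do not replicate Voyant’s tokenizer.

```python
import re

# Hypothetical record format: (institution label, comment-box text).
# Real WCOnline or CLEO exports would need their own parsing step.
Note = tuple[str, str]

# Simple lowercase word tokenizer (an assumption; not Voyant's tokenizer).
TOKEN_RE = re.compile(r"[a-z]+(?:'[a-z]+)?")


def tokenize(text: str) -> list[str]:
    """Lowercase the text and return its word tokens."""
    return TOKEN_RE.findall(text.lower())


def build_corpus(notes: list[Note]) -> dict[str, list[str]]:
    """Pool tokens by institution so each subcorpus stays separable."""
    corpus: dict[str, list[str]] = {}
    for institution, text in notes:
        corpus.setdefault(institution, []).extend(tokenize(text))
    return corpus


def word_counts(corpus: dict[str, list[str]]) -> dict[str, int]:
    """Return the token count for each institution plus a combined "ALL" total."""
    counts = {institution: len(tokens) for institution, tokens in corpus.items()}
    counts["ALL"] = sum(counts.values())
    return counts


if __name__ == "__main__":
    # Hypothetical example notes, not drawn from the study's data.
    notes = [
        ("MSU", "We discussed the thesis and revised the intro."),
        ("TAMU", "Client wanted help with citations."),
    ]
    print(word_counts(build_corpus(notes)))  # {'MSU': 8, 'TAMU': 5, 'ALL': 13}
```

Keeping the institution label on every token, rather than concatenating all notes into one string, is what allows the same corpus to support both per-institution and cross-institutional counts.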

To yield accurate and meaningful results, Voyant automatically screens out “stopwords,” words that are common in a language, such as articles, prepositions, and conjunctions. In our initial analysis, however, we found common words particular to writing center contexts that did not contribute to the meaning of the notes. Because these terms skewed the results, we manually added them to Voyant’s stopwords list. We paid close attention to “functional” terms, words that do not appear important for analysis on their own but that may become important because of where they are placed in the text (Potts, Bednarek, & Caple, 2015). Therefore, we reviewed the stopwords we had manually eliminated from analysis to ensure data integrity, though some sections (such as those using Cirrus and Word Tree) retained stopwords in the final analysis because those words occurred so commonly in the particular visualization.
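The stopword step can be sketched as follows, assuming a simple regular-expression tokenizer. The default list here is a tiny illustrative subset (Voyant’s built-in English list is far longer), and the writing-center terms in the hypothetical `CUSTOM_STOPWORDS` set are examples only, not the terms we actually added. The function returns the removed tokens alongside the kept ones, so the eliminated words can be reviewed rather than silently discarded, in the spirit of the data-integrity check described above.

```python
import re
from collections import Counter

# Tiny illustrative subset of a general English stopword list.
DEFAULT_STOPWORDS = {"a", "an", "the", "and", "or", "but", "of", "to", "in", "on", "with", "for"}

# Hypothetical writing-center terms added by hand (examples only, not the
# list used in this study).
CUSTOM_STOPWORDS = {"session", "client", "consultant", "writer"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and return its word tokens."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())


def filter_terms(text: str, stopwords: set[str]) -> tuple[Counter, Counter]:
    """Split token counts into (kept terms, removed stopwords).

    Keeping the removed counts lets an analyst review what the stopword
    list eliminated, so potentially meaningful "functional" words are not
    discarded unseen.
    """
    kept: Counter = Counter()
    removed: Counter = Counter()
    for token in tokenize(text):
        if token in stopwords:
            removed[token] += 1
        else:
            kept[token] += 1
    return kept, removed


if __name__ == "__main__":
    note = ("The client and the consultant revised the thesis. "
            "The client asked about the thesis and citations.")
    kept, removed = filter_terms(note, DEFAULT_STOPWORDS | CUSTOM_STOPWORDS)
    print(kept.most_common(1))  # [('thesis', 2)]
    print(removed.most_common(1))  # [('the', 5)]
```

In this toy note, “client” would dominate the kept terms without the custom list, which illustrates how context-specific words can skew frequency results.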

Each Voyant tool we used corresponds to the author and institution of the section in which it appears, as listed below. In our previous publication (Giaimo et al., 2018), we analyzed data from each institution individually and reported findings unique to each institution.

- Cirrus: The Ohio State University

- Tables: Michigan State University

- Collocates Graph: Texas A&M University

- Word Tree: University of Michigan
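To make two of these visualizations more concrete, the sketch below computes the kind of counts they display, under stated assumptions: `collocates` tallies the terms that appear within a fixed window on either side of a keyword (the relationship a collocates graph draws as links), and `continuations` tallies the word sequences that immediately follow a keyword (one side of the branching a word tree displays around its root). The function names, window size, sequence length, and tokenizer are illustrative assumptions; this is not Voyant’s implementation, and Voyant’s own settings may differ.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and return its word tokens."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())


def collocates(tokens: list[str], keyword: str, window: int = 5) -> Counter:
    """Count terms within `window` tokens on either side of each `keyword`."""
    counts: Counter = Counter()
    for i, token in enumerate(tokens):
        if token != keyword:
            continue
        start, end = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # skip the keyword occurrence itself
                counts[tokens[j]] += 1
    return counts


def continuations(tokens: list[str], keyword: str, length: int = 2) -> Counter:
    """Count the `length`-word sequences that directly follow `keyword`."""
    counts: Counter = Counter()
    for i, token in enumerate(tokens):
        # Only count occurrences with a full `length` words after them.
        if token == keyword and i + length < len(tokens):
            counts[" ".join(tokens[i + 1 : i + 1 + length])] += 1
    return counts


if __name__ == "__main__":
    tokens = tokenize("We worked on the thesis statement and then the thesis statement again.")
    print(collocates(tokens, "thesis", window=1))
    print(continuations(tokens, "thesis"))
```

A narrower window emphasizes immediate neighbors such as modifiers, while a wider one surfaces looser topical associations; choosing it is an analytic decision rather than a fixed property of the data.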