Meta captioning

How embedded captions can normalize accessibility

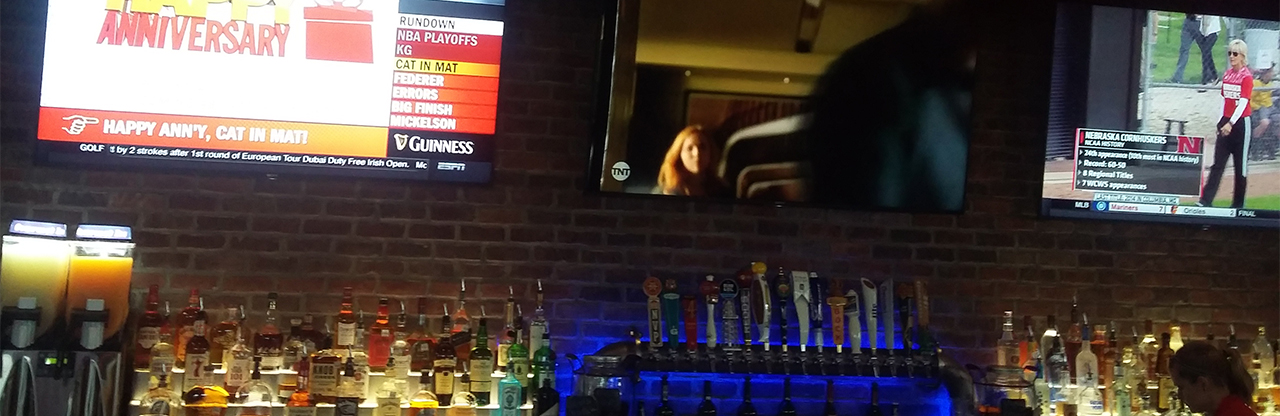

How can we increase the visibility and importance of captioning in public spaces? In U.S. bars and restaurants, TV captions are usually turned off (with the exception of screens in a few cities that must display captions to comply with recently passed city ordinances). When captions are shown in public, they tend to be live and thus more likely to be delayed, contain more errors, look unappealing (all caps, no styling), and cover the action on the sports field. Captioning advocates must fight not only to gain access to public screens but also to increase the usability of live captioning for all viewers. 2016 photo by the author taken in a bar in Lubbock, Texas. In the three television screens above the bar in this photo, none have captions enabled.

How can we increase the visibility and importance of captioning in public spaces? In U.S. bars and restaurants, TV captions are usually turned off (with the exception of screens in a few cities that must display captions to comply with recently passed city ordinances). When captions are shown in public, they tend to be live and thus more likely to be delayed, contain more errors, look unappealing (all caps, no styling), and cover the action on the sports field. Captioning advocates must fight not only to gain access to public screens but also to increase the usability of live captioning for all viewers. 2016 photo by the author taken in a bar in Lubbock, Texas. In the three television screens above the bar in this photo, none have captions enabled.

Captioning tends to be largely invisible for those who do not watch captions at home. For those who require access to captioned programming in public, special arrangements are usually needed to ensure that captioning will be available. (Which captioning or assistive listening devices does the movie theater provide? Or better yet, does the theater offer any open-captioned showings?) In other words, the default user is assumed to be a hearing, able-bodied subject. Our public spaces are built on the assumption that everyone can hear and that technologies such as captions represent special accommodations. Can we raise the profile of captioning, even slightly, by presenting it as a routine component of our digital environments? In what follows, I offer a small intervention designed to increase the visibility and normalcy of captioning. Namely, when movie and television scenes include a television set or other screen in the scene, captions should be enabled on those embedded screens. Because this intervention is concerned with screens inside screens (and multiple layers of captioning), I refer to it as meta captioning.

We don't have many opportunities in public spaces to engage with captioned media. In Lubbock, a mid-sized city in West Texas where I lived for fourteen years, I would estimate that no more than 20% (1 in 5) of the televisions in bars, restaurants, appliance stores, and waiting rooms are displayed with captions. The actual number may be closer to 5% or 10%. We don't really know for sure. No study has attempted to quantify the number of captioned televisions in public spaces in the United States, whether that number is growing over time, or how the public feels about captions, beyond anecdotal reactions posted online from hearing viewers. Only a handful of U.S. cities have passed public captioning ordinances, beginning in 2015, including Portland, Ann Arbor, and Rochester. The Portland, Oregon ordinance, touted as the first of its kind in the country, "requires that television receivers located in any part of a facility open to the general public have closed captioning activated at all times when the facility is open and the television receiver is in use" (Portland, 2015, p. 2). While captioning advocates can celebrate public captioning ordinances, it's arguable whether these laws are riding a wave of increased national awareness for public captioning or spurring the passage of similar laws in other cities.

Because public televisions in restaurants and bars tend to be tuned to live events, especially sports and news programming, the negative reactions to captioning from hearing viewers may at least be partly rooted in one or more usability problems:

- Live captions are delayed five to seven seconds, making it harder for hearing and hard of hearing viewers to reconcile reading and listening. Of course, this problem is mitigated when the volume is muted, which is common in bars and restaurants.

- Live captions are more likely to contain errors because the speech sounds are being transcribed on the spot by a remote stenocaptioner.

- Live captions are displayed in roll-up style, which is harder to process for readers than pop-on style. (On the differences between these styles of captioning, see Bond, 2018.)

- Live captions are set in all capital letters, which is standard for the equipment that supports live captioning, but may make extended reading more difficult or novel for viewers accustomed to sentence case.

- Live and recorded captions may block the action on the sports field, which is perhaps the biggest complaint from hearing viewers who don't support legislating public captioning.

Public access isn't a simple issue. Televisions may be muted in restaurants and bars, or set at a low volume, which affects access differently depending on the type of programming. A non-captioned sports program may be more accessible for sighted patrons (regardless of hearing ability) than a news programing with talking heads. Nevertheless, live sports and news programming rarely depict captioning in the best possible light and complicate our efforts to advocate for universal design.

Despite the limitations of live captioning, public captioning remains an important issue for captioning advocates. The National Association of the Deaf (NAD) (n.d.) puts public captioning on a short list of ongoing advocacy concerns for the organization: "The display of closed captions at all times on all televisions located in public places, such as waiting rooms, lounges, restaurants, and passenger terminals." (The NAD's list of ongoing advocacy issues also includes: full captioning for television commercials and live programming, and "access to emergency broadcasts.") I support efforts to make captioning a more visible and desirable feature of audiovisual texts. I want to think more broadly about what it means to advocate for public captioning and how we might increase the desirability of captioning for diverse publics. In an ideal world, captioning becomes not only a more common experience for all viewers but an expected and even normal feature of audiovisual texts instead of only an accommodation for a specific audience.

In terms of enhanced and experimental captioning, let me offer one small way we can increase the visibility and normalcy of captioning. This intervention is simple but potentially powerful: when television sets or computer screens are shown on TV or in a movie (e.g., a character is shown watching TV), captioning should be enabled on these embedded screens. Let's call this type of intervention meta captioning, which is the process of hard coding captions onto the screens displayed inside the screens we are watching. The result is two sets of captions: the traditional track of closed captioning that can be turned on or off, and the integrated or retrofitted caption track that is layered onto the video track to mimic a caption track for the embedded screen. This second track needs to be painstakingly added in post-production because to my knowledge televisions are rarely shown with captioning when they are embedded in the scene we are watching. An exception can be found in a scene from Viral:

In this clip from Viral, a 2016 zombie movie, a television set is shown briefly in the background of the scene. The embedded television screen displays the growing patient count from the virus as an on-screen graphic. Captions appear in pseudo-live style at the bottom of the embedded television screen: "FIRST RESPONDER...pandemic threat. Symptoms." Source: Netflix.

Meta captioning isn't always intended to be read. For example, a movie character may pass quickly by a shop window where televisions are being advertised for sale, or a television program may be shown briefly in the background of a living room or bar scene. There may not be enough time for viewers to read secondary captions displayed briefly in the background. The same captioning guidelines about reading speed or even legibility do not necessarily apply in these examples because meta captioning will not always convey (or be intended to convey) semantic meanings to viewers. Instead, what meta captioning conveys and normalizes is the medium of captioning itself, because it serves as a potential reminder of the necessity and affordances of captioning for the millions of people who are deaf and hard of hearing.

I know it may sound strange to be advocating for a form of captioning that isn't always intended to be read or consumed in traditional ways. Meta captioning is disruptive. It highlights the mode of communication (as well as the primary stakeholders: deaf and hard of hearing viewers) over the meanings that are communicated through this mode. Meta captioning disrupts the very idea that captioning is always or simply about specific linguistic meanings.

Experiments with meta captioning

First, a scene from Glitch (2015), an Australian science fiction television series about six people from a small Australian town who rise from the dead without warning or explanation. In this scene from the second episode of the first season ("Am I in Hell?"), Charlie Thompson (Sean Kennan), a young man who died early in the twentieth century before the advent of television, marvels at the moving picture device while another character (played by Emma Booth) tells him what it's called ("It's TV."). In the original version, the television in the scene is not captioned.

Source: Glitch, "Am I in Hell?," 2015. Netflix. Original captions.

For this experiment, I retrofitted the object of Charlie's curiosity, adding captions to the television in the low style we often see on TV (all capital letters, sans serif typeface, white letters on a semi-transparent black background). While I didn't know the name of the cartoon or even precisely what was being said by the animated characters, I listened repeatedly and did my best. My goal was not necessarily to provide access to every speech sound emanating from the TV. (Note that in my experiment the cartoon's speech sounds continue in the background, uncaptioned, when the camera is focused on Charlie.) Nor was my goal to create a second layer of captions that could be consumed easily (or even at all) as semantic statements. Whether anyone can read the captioned words is not the point. Instead, I wanted to suggest what universal design looks like when captioning is normalized. To create the effect, I styled and sized the captions as a series of new text layers, used motion tracking to force the captions to stick to the TV screen when the camera moves, and blurred the final caption to mimic the shift in focus at the end of the clip. Obviously, retrofitting captions (adding them in post-production) is more time consuming and technically challenging than simply turning on the television's captions when a scene is being filmed.

Source: Glitch, "Am I in Hell?," 2015. Netflix. Custom captions were created by the author in Adobe After Effects.

Second, a scene from Last Man Standing, a sitcom on ABC and rebroadcast on Hulu starring Tim Allen as a "blunt 'man's man'" (yes, seriously) whose conservative values create interpersonal conflicts in the home he shares with his spouse and three grown daughters. Allen (as Mike Baxter) runs a video blog for his fictional workplace, Outdoor Man. His videos are presented to us as web videos, complete with ads in the margins and a custom video interface. The interface includes a scroll bar with play and pause functions and plus/minus buttons for volume control (but no caption support in the form of a cc button). In this example ("Stud Muffin"), the viewer sees more and more of the video interface as the camera pans out, including a title below the video in large, decorative letters: "MARRIAGE SAVERS SALE." In the original version, the captions cover the video title, which create problems with color contrast and legibility.

Source: Last Man Standing, "Stud Muffin," 2014. Hulu. Original captions.

For this experiment, I integrated the captions into the web/meta video. As the camera pans out, tracks left, tracks right, pans in, and pans out again, the experimental captions stick to the fictional video interface and resemble (hopefully) web video captions. I also added a cc icon in the lower right corner of the video as a subtle reminder of the necessity of captioning. Because the web video is foregrounded (unlike the Glitch example), these captions are intended to be read. When the captions are moved into the frame of the web video itself, they become part of the web video (rather than an add-on) and, more importantly, resolve the legibility problems in the original.

Source: Last Man Standing, "Stud Muffin," 2014. Hulu. Custom captions and cc icon were created by the author in Adobe After Effects.

Meta captioning, or the practice of foregrounding captioning itself, is a form of advocacy that elevates, integrates, and increases the visibility of captioning.