In Multimodal Discourse, Gunther Kress and Theo van Leeuwen (2001) define multimodal design as “a deliberateness about choosing the modes for representation, and the framing for that representation” (p. 45). While many who create digital environments and texts acknowledge the need for flexibility within systems, such that few web page designers focus exclusively on functionality within just one web browser, for example, there has been far less attention to flexibility at the user level, particularly in providing means for individuals to manipulate texts to enhance their own access.

An example that illustrates the lengths to which some companies go to protect their material from modification is detailed in Katie Ellis and Mike Kent’s (2011) Disability and New Media. In their first chapter, “Universal Design in a Digital World,” they related the story of Dmitry Skylarov, a Russian programmer who was arrested when he came to the United States to demonstrate his Advanced eBook Processor at a technology conference. Skylarov was charged under the auspices of the Digital Media Copyright Act, which “criminalizes technologies which circumvent a program’s access controls” (p. 13). Skylarov’s specific crime? He had created software enabling users to access Adobe eBooks on other platforms, thus potentially infringing on Adobe’s copyrights. The case became of interest to the disability community because it dealt with the “legal ramifications of manipulating data in order to access it” (p. 13), for instance, by converting PDFs into alternative formats readable by screen readers. Ellis and Kent detailed several other examples in which disabled people have had to resort to legal battles in order to persuade companies and organizations to make their websites and online materials available in accessible formats or to enable users to modify them into accessible formats.

Attention to design in multimodal environments has typically privileged a particular set of preferences and modes of working, preferring to respond to complaints rather than anticipate ways that different users might be able to adapt texts and environments to suit their needs and preferences. Put another way, many multimodal texts are not designed with flexible means for manipulating the information at the level of the user. Let’s go back to the example of video content made available online, where primary information is often embedded in the sound of the video, such as on CNN.com and other news sites. These videos are generally not subtitled, nor do the websites on which they can be found provide transcripts. If I want to know what is said on the video, I have to ask or hire someone else to tell me. There is increasing recognition of the need for those who upload videos to include subtitles, and captioned material has been growing in popularity and availability (see, e.g., “Adding and Editing Captions”But an equally important step is to enable users to use technology to subtitle those videos they want to watch even if they are not already subtitled (Automatic Captions in YouTube ![]() , 2009). This technology was still in beta testing at the time we are writing and is far from being accurate. In fact, it is so inaccurate that its inaccuracy is humorously parodied through a genre of “Caption Fail” videos, where individuals run the captioning technology on music videos and other performances and then re-perform those songs with new wording provided by the captions (see, e.g., Christmas Carol Caption Fail

, 2009). This technology was still in beta testing at the time we are writing and is far from being accurate. In fact, it is so inaccurate that its inaccuracy is humorously parodied through a genre of “Caption Fail” videos, where individuals run the captioning technology on music videos and other performances and then re-perform those songs with new wording provided by the captions (see, e.g., Christmas Carol Caption Fail ![]() by Rhettandlink, 2011).

by Rhettandlink, 2011).

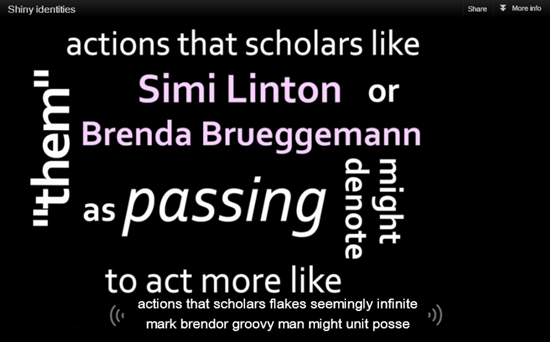

The above image is an example of our own caption fail, a screen shot taken from M. Remi Yergeau's video on shiny identities. In the video, Remi reads aloud from an essay-poem while text dynamically moves across the screen. Because the essay text is embedded in the video itself, Remi did not use YouTube's caption feature. When I turn on the automatic captions, however, garbled text appears.

| Original: actions that scholars like Simi Linton or Brenda Brueggemann might denote as passing | Caption fail: actions that scholars flakes seemingly infinite mark brendor groovy man might unit posse |

We need platforms that enable users to download sound texts and generate automatic transcripts or, better yet, subtitles. We need programs and multimodal tools and resources that enhance users’ ability to move texts into preferred—that is, more usable—media, especially because the variety of users and the range of individual disabilities means that there will be no set guidelines or simple design solutions that will make any content accessible to all users. Far too often, disability is an afterthought rather than considered at the incipient design of a digital text (see Zdenek, 2011). In other words, as a consequence of the way multimodal texts are designed and implemented, they are rarely inclusive or fully accessible. These texts need to be reconsidered both at the level of design (as creators invent and produce different ways of realizing multimodal texts) and at the level of user-centeredness (as users adapt these texts to meet their own preferences for consumption and interaction with others in digital environments).